New deep learning model uses video to measure embryonic development

Originally published by the University of Plymouth on May 28, 2024

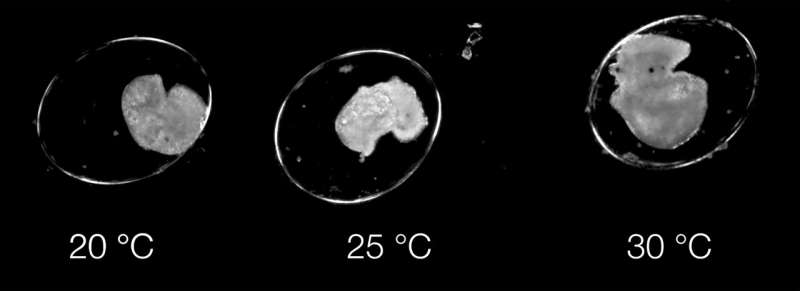

Pond snail embryos at the University of Plymouth. Credit: University of Plymouth

Research led by the University of Plymouth has shown that a new deep learning model can identify, from video, what happens during embryonic development and when it happens.

Published in the Journal of Experimental Biology, the study, titled "Dev-ResNet: Automated developmental event detection using deep learning," shows how the model, known as Dev-ResNet, can identify when key functional developmental events occur in pond snails, including the onset of heart function, crawling, hatching and even death.

A key innovation in this study is the use of a 3D model that learns from the changes occurring between frames of the video, rather than from still images as has been more traditional.
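To give a rough sense of what "learning from changes between frames" means, here is a minimal sketch of a 3D convolution, the generic operation that 3D video models build on. It is not Dev-ResNet's actual architecture, and the clip and kernel values are hypothetical. A kernel that spans two frames can respond to a pixel that changes over time (like a beating heart) while ignoring a pixel that stays constant, which a model looking at one still image at a time cannot do.

```python
def conv3d(video, kernel):
    """Valid (no padding) 3D cross-correlation of a single-channel clip.

    video:  nested list indexed [t][y][x]
    kernel: nested list indexed [dt][dy][dx]
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                s = 0.0
                # Sum over a small window that extends across time as well as space.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            s += kernel[dt][dy][dx] * video[t + dt][y + dy][x + dx]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out


# Hypothetical 4-frame clip of 1x2 pixels: the left pixel "beats" (alternates 0/1),
# the right pixel stays constant at 5.
clip = [
    [[0.0, 5.0]],
    [[1.0, 5.0]],
    [[0.0, 5.0]],
    [[1.0, 5.0]],
]

# Hand-set temporal-difference kernel spanning two frames: frame[t+1] - frame[t].
# A trained model would learn its kernel weights from data instead.
temporal_kernel = [[[-1.0]], [[1.0]]]

response = conv3d(clip, temporal_kernel)
for t, plane in enumerate(response):
    print(t, plane[0])
```

The left (changing) pixel produces a non-zero response at every time step, while the constant right pixel produces zero. In a real network, many such kernels are learned rather than hand-set.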

Because it works from video, Dev-ResNet reliably detects features ranging from the first heartbeat and crawling behavior through to shell formation and hatching, and it has revealed previously unknown sensitivities of these features to temperature.

Although this study used pond snail embryos, the authors say the model is broadly applicable across species, and they provide comprehensive scripts and documentation for applying Dev-ResNet to other biological systems.

In the future, the technique could help accelerate understanding of how climate change and other external factors affect humans and animals.
